The recent Twitter takeover is a perfect example of why open-source intelligence (OSINT) is a critical discipline for analysts. It shows that technology and tools are always in beta. It also illustrates that change is constant, and it reinforces the growing demand for a discipline like OSINT.

Regardless, publicly available information on Twitter will look a lot different in the Elon Musk era. Eliot Higgins from Bellingcat summarizes it well in his tweet:

Musk may have been slow to purchase Twitter, but now that the purchase has been finalized, he is proving that changes come quickly. In this brief, Overwatch analysts home in on a change that has shaken up the Twitterverse and could have significant impacts on how OSINT analysts discover, collect and vet publicly available information found on the platform. That change? Charging for the iconic blue checkmark.

Earlier this week, we polled our LinkedIn community for a temperature check on sentiment regarding the new Twitter regime. Here are the results:

Chasing Clout Behind the Blue Checkmark

The blue checkmark was never conceived of as a signifier of importance on the platform, but according to the Twitter support team in 2017, it has become one. One article, for example, notes that in the early days of verification in 2013, Twitter rolled out a filter for verified accounts that helped them connect and view information shared by other verified users. A second article notes that even Musk’s interactions on Twitter trend toward those with blue checkmarks: in 2022, 57% of Musk’s interactions on the platform were with verified users. On Twitter, or any social media platform, it is one thing to garner engagement and another to receive validation from an influencer, in this case one blessed by the blue checkmark.

As of 4:40 p.m. EST on Wednesday, November 9, 2022, Twitter defines the blue checkmark as:

With the addition of the Twitter Blue subscription service, in which users pay $8 for Twitter validation, the simple blue checkmark icon becomes a greater point of confusion: what it means as defined on Twitter’s website, what it means to the community, and what it means to those who hold a blue checkmark and those who do not.

Because If Everyone Is Verified, No One is Verified

The Twitter Blue verification has not even rolled out yet, but its implementation appears all but set. Musk has already taken to Twitter to propose what Twitter Blue verification would look like, only to retract the proposal 24 hours later.

New verification initiatives suggested by Musk have many potential consequences for OSINT, both in terms of whose voices are easily accessible on the platform and the sincerity of the message those voices are putting out. Much of this will be determined by how verification is conducted.

Which Voices Will ‘Twitter Trends’ Favor

While Musk may try to change the perception of the blue checkmark as a signifier of importance and “empower the voice of the people,” that does not mean previous perceptions of what the blue checkmark means will change overnight. This leftover impression of the blue checkmark as an indicator of truth and, therefore, of worthiness for inclusion in the public discourse can lead to issues as verification expands to the population at large.

Verifying questionable accounts in this climate is a source of potential concern. This was already an issue before Musk’s takeover of Twitter. In 2017, for example, Twitter halted verification after the platform was found to be verifying accounts belonging to white supremacists. Additionally, in 2021, Twitter was found to be giving blue checkmarks to fake accounts. With Twitter Blue being rolled out, however, we can already see specific individuals like QAnon John, a conspiracy theorist, attempting to pay for verification. It is likely more will follow, and while the argument for freedom of expression is strong, it is undeniable that it will take time for the blue checkmark to change in the popular zeitgeist from a trusted source of information to a simple receipt of purchase.

The second issue, which depends on the verification method, is that bot farms, some made up of actual humans, will not only be able to remain active on the platform but will also receive the added benefit of verification and, therefore, legitimacy. While fully digital bots may be a thing of the past, human-run accounts participating in information operations will easily be able to pay eight dollars a month for this added benefit. Additionally, these accounts will be favored by the algorithm once verified, at least according to Musk, who spoke about the favored positioning verified accounts will receive relative to non-Twitter Blue accounts.

This new verification system also threatens the voices of two groups: everyday Twitter users and those who, for reasons of safety or a desire to express their opinions freely, may wish to operate anonymously. Both are sources of information that are highly valuable for OSINT analysts.

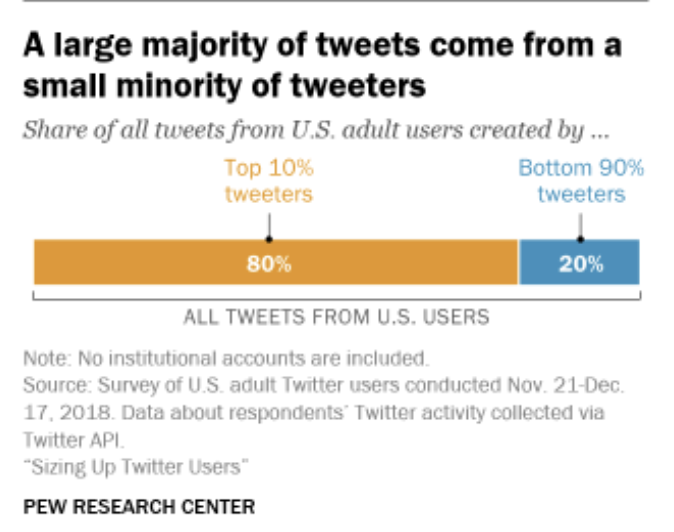

A look at polls conducted by Pew Research Center in 2019 highlights a few noteworthy statistics regarding Twitter users. The top 10% of Twitter users are responsible for 90% of tweets, while the bottom 90% of Twitter users tweet on average twice a month. Additionally, research by Scientific American, published in 2015, found that lower-income individuals in the United States used Twitter to communicate socially, while wealthier individuals used it as a place to disseminate information.

As price becomes a barrier to entry, we may lose the ability to gauge the sentiment of the bottom 90% of people using Twitter or of those who use Twitter to communicate socially. This, in effect, will create an echo chamber of wealthier, primarily liberal, politically driven Twitter users. While this could be useful if your goal is to gauge that audience, it becomes less useful to OSINT analysts who wish to gauge the broader, more representative audience.

A Subscription Model Muffles Voices Even Further

According to our poll, 56% predict more dis-/misinformation in this new age of Twitter. This is a problem that already prevails on Twitter, and artificial intelligence and machine learning can only moderate to a degree. Human labor is required, yet one immediate action Musk took shortly after the news sank in was laying off half of the Twitter workforce. Yoel Roth, head of safety and integrity at Twitter, offered reassurance that the mass layoff did not impact front-line review.

Musk was quick to reverse course and rehire some of the employees who were fired; hopefully this will slow down some of the other proposed changes he has tweeted about. First, verification. The blue checkmark: an amorphous icon that individuals associate with clout and/or validation. The most popular request may now come with a price tag.

The majority of Twitter users, 90%, are passive observers with only occasional activity, listening to the 10% of active users. With the new subscription model, the voice of this quiet majority will be pushed down further, and analysts could see a migration to other social media platforms. Twitter will still serve as an indicator of current trends and views, as analysts watch its ripples spread and evolve into more virulent or inflammatory rhetoric across the social/digital space.

Gone Are The Days of Anonymity

Anonymous posting is also under threat from this system. Despite Musk’s assurances that “A balance must be struck” between anonymity and authentication, analysts have seen no indication that there is room for anonymity on his new version of Twitter. This potential loss of anonymity poses two main problems for the open-source community.

The first is that those who post anonymously often express their genuine opinions or thoughts more freely. While this may lead to trolling or inflammatory speech, the lack of a language filter also allows OSINTERs to more accurately gauge the sentiment of an individual or group of accounts. If verification ties content to a real person, we risk people filtering themselves, and analysts losing out on genuine reactions and feelings about specific topics.

The second and more consequential effect of a loss of anonymity will be a loss of content coming from less permissive environments. Tweets about protests, dissatisfaction with the government, or even critical information from war zones are often sent out anonymously to protect the posters from retribution. Countries such as Myanmar, Saudi Arabia, Turkey, India and China often arrest individuals for criticizing the government on Twitter. This goes for journalists, activists, protesters, and supporters of opposition parties. If the identity of those pushing out information from these places is no longer shielded by anonymity, we will no longer see tweets sharing information about these countries.

Even if Musk can marry the paradoxical concepts of anonymity and verification, his takeover of Twitter has relied on investors from several countries, including China, Saudi Arabia, the United Arab Emirates, and Qatar. It is unknown at this point what level of influence these investors will have in Twitter’s operations and what sway they will have over Musk in general. This puts anonymous tweets from these countries at risk.

The push to “democratize” verification on Twitter comes with several challenges and potential pitfalls, not only for analysts but also for the platform. How successfully these pitfalls can be avoided will depend on how the changes are implemented. However, given the speed with which Musk seemingly wants these new features to become available, unintended consequences will be unavoidable.

Much discussion has been devoted to the potential impact of eased moderation policies on efforts to curb mis- and disinformation. Social media platforms such as Twitter rely on metrics of engagement and activity as an underlying part of their business model, and that reliance will be difficult for them to address. The fact remains that inflammatory and derisive language drives stronger responses and activity from a smaller but vocal segment of the Twitterverse. Easing moderation rules would allow the platforms to sustain the most important driver of current metrics. Enforcing moderation policies requires labor and overhead costs that work against the underlying business drivers. As previously referenced, the responses come from 10% of users, with the remaining 90% staying quiet on social media platforms such as Twitter. In addition, the boisterous 10% will pay for the subscription, as their activity is tied to their own pursuits.

As noted by Jonathan Haidt, the Thomas Cooley Professor of Ethical Leadership at New York University, the far-left and far-right fringes of our society number 7-8%, but social media platforms’ metrics and the fringes’ exuberance produce a disproportionate impact on social discourse, debate and activity, or the lack thereof, in the social media space and in society at large. He defined this impact in a recent 60 Minutes segment as “Structural Stupidity,” where organizations or spaces populated by smart and thoughtful people are placed in situations where dissent is severely punished. Those people then go dormant, which limits critical debate and critique of the dominant or prevailing opinion or view. This could well be true of Twitter at present.

Our Assessment

As we examine the impact of Twitter’s eased moderation policies on mis- and disinformation, we assess that, given the aforementioned metrics driving engagement and activity, the changes will likely do little to curb the growth of mis- and disinformation. While the measure may, to some degree, better identify the sources of disinformation and designate them properly, it will not significantly reduce the spread of misinformation, for a few reasons.

Fact checking remains a critical piece of combating misinformation, but under current models it loses out to misinformation in dissemination to the public. Even with technological advances like machine learning and artificial intelligence, the endeavor to identify misinformation is labor intensive. Le Monde’s Adrien Sénécat, covering for Décodeurs a study of the 2018 French elections and what the spread of misinformation on Twitter looks like, noted that fact checks of misinformation get approximately four times fewer shares than the original falsehoods. Twitter’s moderation easement and layoffs will not address this underlying aspect.

Disinformation and misinformation factor, to varying degrees of importance, into OSINT analysts’ work to identify and assess threats and vulnerabilities. Twitter’s changes over the last few weeks do not make it any less important as an OSINT tool; they do require analysts to exercise additional critical thinking. Unless bolder systemic changes are made, Twitter will remain a place where we see actors who benefit from promoting inflammatory rhetoric, misinformation, and disinformation to further an agenda. It is unlikely that these actors will be the major instrument of action for threats, as that would undercut their pursuits.

Twitter will be a platform where those ripples begin. For analysts, it will be important to track the resonance of those ideas and personalities across more ideologically affiliated platforms throughout the stratified web. The growth of memberships on these platforms will be important as people in the 90% migrate away from paying subscription fees and align themselves with groups they identify with, which is a very human thing to do. Since joining Twitter in 2014, their success aligns with Musk’s. And much like other Twitter influencers, Musk learned the formula for success in being “heard” or retweeted in the Twittersphere: tweet often and tweet loudly. The more outlandish, the more engagement.